An AI-Generated Podcast About This CLBTNR?!

Tagged: About / ArtificialIntelligence / NotableAndQuotable / ϜΤΦ

Google has done a very silly thing: feed them some information, and they’ll make a podcast with 2 AI hosts ‘discussing’ it. We fed them this Crummy Little Blog That Nobody Reads (CLBTNR). The results are… amusing?

They Made a What Now?

From Scott Alexander’s Astral Codex Ten November 2024 links post [1] came a link (item #17 on Scott’s list) to Nostalgebraist’s peculiar experience [2] with a new ‘service’ from Google. It seems that you can feed them whatever documents you like, and get back an audio podcast of two AI hosts ‘discussing’ the material. [3]
Nostalgebraist, after feeding it 2 of his novels, has this to say about the result:

These podcast episodes are… they’re not, uh, good. In fact, they’re terrible – so cringe-y and inane that I find them painful to listen to.

But – unlike with the “AI-generated content” of even the very recent past – the problem with this stuff isn’t that it’s unrealistic. It’s perfectly realistic. The podcasters sound like real people! Everything they say is perfectly coherent! It’s just coherently … bad.

It’s a perfect imitation of superficial, formulaic, cringe-y media commentary podcasts. The content isn’t good, but it’s a type of bad content that exists, and the AI mimics it expertly.

The badness is authentic. …

But even if no one especially likes this kind of slop, it’s highly inoffensive – palatable to everyone, not likely to confuse anyone or piss anyone off – and so it’s what we get, for now, while these companies are still cautiously testing the waters.)

… Painfully basic when it’s not actually inaccurate.

That’s about what we would expect: expertly inoffensive, and having at best a casual relationship with truth.

It’s not as though Google didn’t warn us; in tiny type at the bottom of the NotebookLM page, it says:

NotebookLM may still sometimes give inaccurate responses, so you may want to confirm any facts independently.

Ya think?!

The Acid Test

Ok, you know what’s coming next, right?

Rashly, I fed it this very CLBTNR. All of it. Here’s the summary it generated:

Summary

The provided text consists of blog posts written by a retired scientist, who describes his observations and opinions on a wide range of topics, including the 2024 US election, the COVID-19 pandemic, Russia’s invasion of Ukraine, and scientific advancements. He expresses his frustrations with the state of politics in the United States, highlighting his concerns about Republican tactics and the spread of misinformation. The blogger frequently discusses scientific data and research, using his expertise to analyze events and share insights on various topics. He also incorporates personal anecdotes and humorous observations, providing a unique and engaging perspective on the world.

Ok, I guess that’s not exactly wrong…

The original is a giant .wav file. But I’ve downloaded it for archival purposes and converted it to a much smaller .mp3 file, because I’m not stupid. Here’s the 13-minute ‘podcast’ of 2 AI-generated characters attempting to discuss this CLBTNR.

A number of things are, of course, just wrong:

- I didn’t get vaccinated in March of 2020; there were no COVID-19 vaccines in March of 2020. My vaccination came a year later, in 2021.

- The blog didn’t start in February of 2020, either. Formally, it started in July 2020. (“Formally”: in the sense that the first upload was in September, but back-dated to the first day of retirement.)

Much of the rest is not wrong per se, just vapid, superficial, and inconsequential. But the imitation of cringe-inducing mainstream media talk radio or podcasting… that’s spot-on perfect.

I mean, I’m as vain as the next person, so their generally positive view of this CLBTNR made me feel sorta good… until I slapped myself silly with the fact that there’s nothing there that can even understand, let alone have a respectable opinion. I might as well feel good about my dishwasher. (It is a good dishwasher, for washing dishes. But I don’t ask it for opinions on what I read or write.)

The Weekend Conclusion

Vapid. Inconsequential. Only a casual relationship to the truth, at best. Sanitized to be almost aggressively inoffensive.

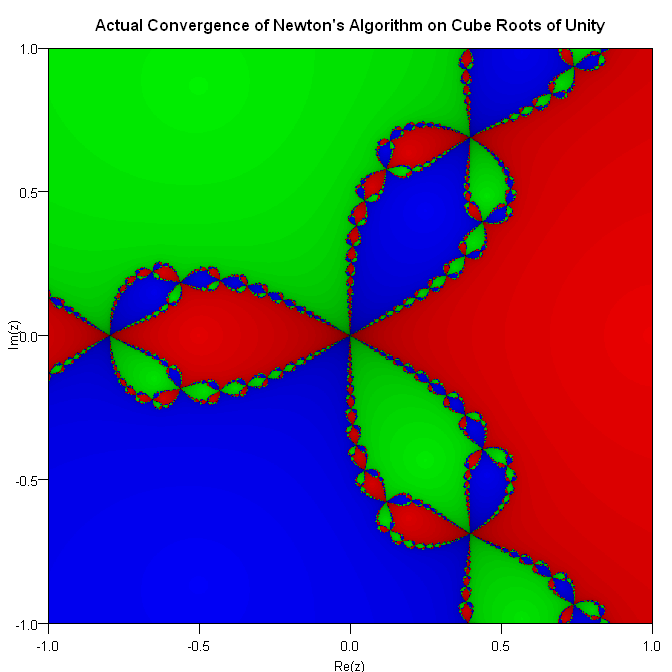

Again, look at the tuba picture above: exactly who needs this and who would be rash enough to trust the output? Even Google says it lies to us, so you have to fact-check every dang thing it says. Surely it’s less work to summarize the material for yourself, rather than listen to over-persuasive voices that occasionally lie convincingly?

(Ceterum censeo, Trump incarcerandam esse. That is: furthermore, I hold that Trump should be imprisoned.)

Addendum 2024-Nov-02: Le Mot Juste

Pablo, responding by email, came up with the perfect description:

Feels like Muzak in spoken form.

Le mot juste, c’est celui-là! (That’s exactly the right phrase!)

Notes & References

1: Scott Alexander Siskind, “Links For November 2024”, Astral Codex Ten blog, 2024-Nov-01. Item #17 is the relevant bit, in case the link doesn’t take you there directly.

2: ‘Nostalgebraist’, “In the Other Uncanny Valley: AI Voice News at Google”, Nostalgebraist Tumblr blog, 2024-Sep-29.

3: Google Staff, “Notebook LM”, Google services, downloaded 2024-Nov-01.